Contents

- Artificial Intelligence

- Neural Networks

- Machine Learning

- The LeNet Neural Network

- LeNet Training

- ChatGPT

- GPT-2

- Gemini 2.5 Pro

Artificial Intelligence

- Artificial Intelligence (AI) is the project of developing computer systems and robots capable of intelligent behavior, such as reasoning, learning, using language, and problem solving. The project began in 1956 at a workshop held on the campus of Dartmouth College.

- Perhaps the most successful AI development has been neural networks trained through machine learning.

- ChatGPT, for example, is a chatbot that uses the GPT neural network.

Neural Networks

- Humans are good at some things that computers traditionally are not. Identifying hand-written digits is one example.

- There’s no simple, neat algorithm for identifying hand-written digits like these. You might be able to develop a program by trial and error, but the result would be a kluge.

- But over decades an ingenious idea emerged: a computer program that mimics how the brain learns.

- The human brain is a network of 86 billion neurons connected at synapses to other neurons, sensory cells, and muscles.

- As people learn, synaptic connections between neurons change, thereby “storing” new information. This is neural plasticity.

- The analog for computer software is a program that can be trained, modifying itself in light of what it learns.

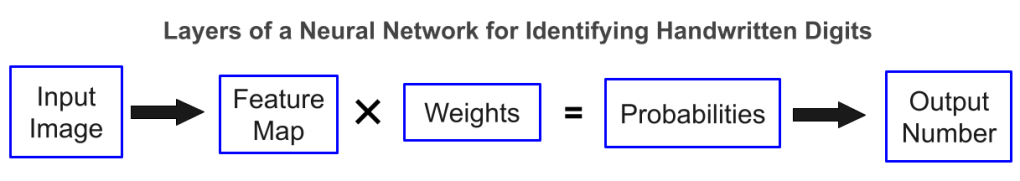

- Here’s a simplified layout of a neural network program for identifying hand-written digits.

- Input Image

- The program first reads an image of a hand-written digit.

- Feature Map

- The program analyzes the digit, pixel by pixel, resulting in a sequence of 800 numbers that captures the main features of the digit. Then it multiplies this Feature Map by a block of numbers called Weights – the core of the neural network.

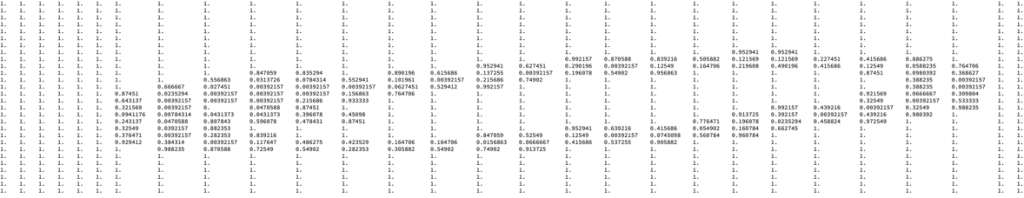

- Weights

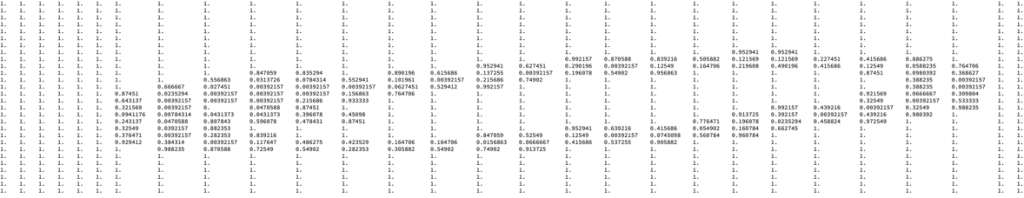

- The weights, which can be represented as a spreadsheet of numbers with 10 columns and 800 rows, are the analog of synaptic connections in the brain.

- The brain learns by changing itself – by altering its synaptic connections.

- A neural network also learns by changing itself – by altering its weights.

- Probabilities

- The product of the Feature Map times the Weights (using what’s called matrix multiplication) is a sequence of 10 numbers, the Probabilities that the hand-written digit is 0, 1, 2, 3, …, or 9.

- Output

- The program outputs the number with the highest probability.
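
The layout above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the feature map and the weights are random placeholders rather than trained values, the helper names `matvec` and `predict_digit` are invented for the example, and the weight table is stored with one row of 800 numbers per digit (the spreadsheet described above, turned on its side).

```python
import random

def matvec(weights, features):
    """Multiply a weight table (one row per digit) by a list of features."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]

def predict_digit(weights, features):
    """Return the digit (0-9) whose score is highest."""
    scores = matvec(weights, features)
    return max(range(len(scores)), key=lambda d: scores[d])

rng = random.Random(0)
features = [rng.random() for _ in range(800)]            # placeholder feature map
weights = [[rng.uniform(-1, 1) for _ in range(800)]      # 10 rows x 800 placeholder weights
           for _ in range(10)]

scores = matvec(weights, features)
digit = predict_digit(weights, features)
print(len(scores), digit)
```

With random weights the chosen digit is meaningless; the point is only the shape of the computation: 800 numbers in, 10 scores out, and the largest score wins.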

Machine Learning

- The values of the weights result from machine learning, a process through which the network is taught by a training program. Here’s how the digit-identifying network is trained.

- The training program (TP) first sets the weights to random numbers.

- Then the TP begins the training process, which comprises:

- Feeding the network an image of a hand-written digit

- Running the network and getting a result

- Seeing if the result is correct.

- If the output is wrong, adjusting the weights so the network is slightly more likely to get the correct answer the next time it sees the same image.

- The process is repeated thousands of times with different digits.

- Amazingly, the network gets better and better at identifying hand-written digits, ultimately reaching a hit rate close to 100 percent.
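
The training steps above can be sketched as a short loop. This is a minimal sketch, not the training program used for the figures: it uses a simple perceptron-style update (raise the weights of the correct answer, lower those of the wrong guess) in place of the method a real network would use, and the data are a hypothetical toy set of three "digits" with three features each. The names `predict` and `train` are invented for the example.

```python
import random

def predict(weights, features):
    """Run the network: score each class and return the best one."""
    scores = [sum(w * f for w, f in zip(row, features)) for row in weights]
    return max(range(len(scores)), key=lambda c: scores[c])

def train(examples, num_classes, num_features, epochs=50, rate=1.0, seed=0):
    rng = random.Random(seed)
    # Step 1: set the weights to random numbers.
    weights = [[rng.uniform(-1, 1) for _ in range(num_features)]
               for _ in range(num_classes)]
    for _ in range(epochs):
        for features, label in examples:
            guess = predict(weights, features)       # run the network
            if guess != label:                       # check the result
                for i, f in enumerate(features):     # nudge the weights
                    weights[label][i] += rate * f    # toward the right answer
                    weights[guess][i] -= rate * f    # away from the wrong one
    return weights

# Hypothetical toy data: (features, correct class).
examples = [([1.0, 0.0, 0.0], 0), ([0.0, 1.0, 0.0], 1), ([0.0, 0.0, 1.0], 2),
            ([0.9, 0.1, 0.0], 0), ([0.1, 0.9, 0.0], 1), ([0.0, 0.1, 0.9], 2)]

weights = train(examples, num_classes=3, num_features=3)
accuracy = sum(predict(weights, f) == y for f, y in examples) / len(examples)
print(accuracy)
```

On this easily separable toy set the loop ends up classifying every example correctly; real digit images need far more data and a more refined update rule.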

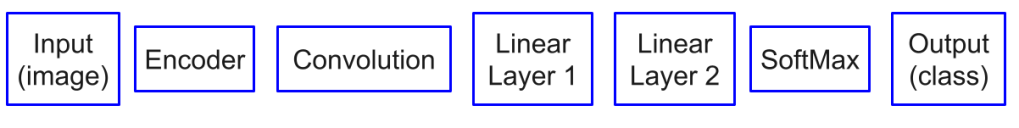

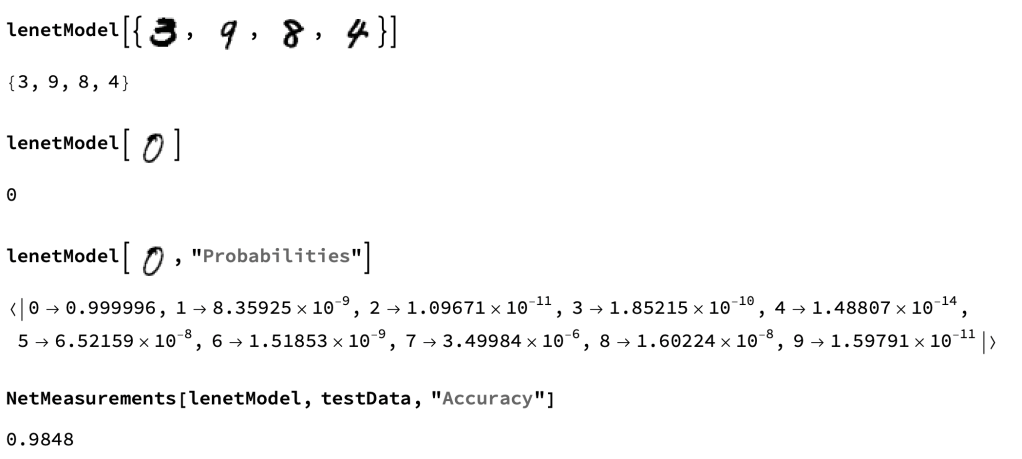

The LeNet Neural Network

- In 1998 Yann LeCun and his colleagues at Bell Labs developed the classic LeNet neural network for identifying hand-written digits.

- In 2018 LeCun received the A.M. Turing Award “for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing.”

LeNet’s layers are:

- The Input Layer reads a hand-written digit.

- The Encoding Layer converts the image’s pixels to numbers, where 1 signifies a white pixel, 0 a black pixel, and any number in between a shade of gray.

- The Convolution Layer develops a feature map of the image, a list of 800 numbers.

- Linear Layer 1 multiplies the 800 numbers by a huge spreadsheet of 400,000 numbers with 800 columns and 500 rows. The numbers in the spreadsheet, which run from -2.33753 to 1.53562, are weights, computed by the LeNet training program. The result is a list of 500 numbers.

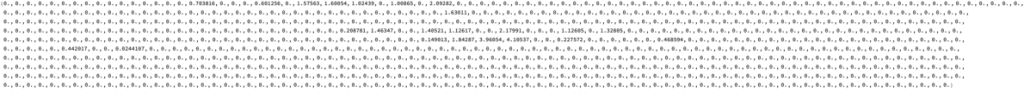

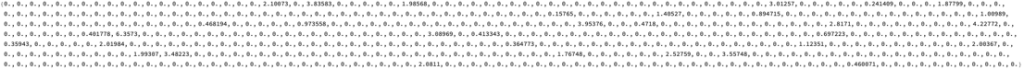

- Linear Layer 2 multiplies the 500 numbers from Linear Layer 1 by a smaller spreadsheet of 5,000 numbers with 500 columns and 10 rows. The numbers in the spreadsheet, which run from -1.17305 to 0.885933, are a second set of weights, again computed by the LeNet training program. The result is a list of 10 numbers:

- 12.6278, -5.8821, -12.4399, -9.37623, -18.9297, -3.63099, -7.21256, 0.0864815, -5.29959, -12.1295

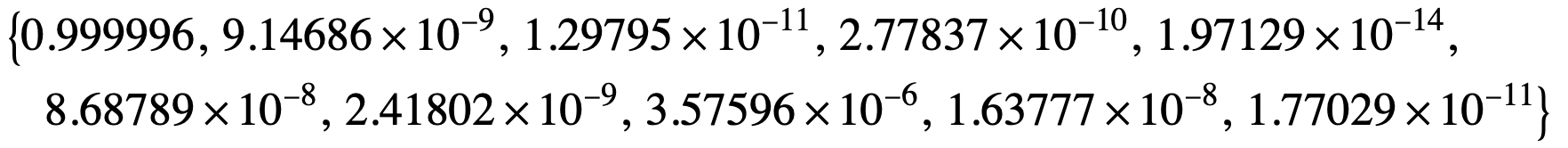
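
The two linear layers described above amount to two chained matrix multiplications. The sketch below checks the sizes quoted in the text (400,000 and 5,000 weights; 500 and 10 outputs). It follows the description here and omits any other processing between the layers; the weights and feature map are random placeholders, and the helper name `linear` is invented for the example.

```python
import random

def linear(weights, inputs):
    """One linear layer: each row of weights produces one output number."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

rng = random.Random(0)
feature_map = [rng.random() for _ in range(800)]                      # placeholder input
w1 = [[rng.uniform(-1, 1) for _ in range(800)] for _ in range(500)]   # 500 rows x 800 columns
w2 = [[rng.uniform(-1, 1) for _ in range(500)] for _ in range(10)]    # 10 rows x 500 columns

hidden = linear(w1, feature_map)   # 800 numbers in, 500 numbers out
scores = linear(w2, hidden)        # 500 numbers in, 10 numbers out

count_w1 = sum(len(row) for row in w1)
count_w2 = sum(len(row) for row in w2)
print(len(hidden), len(scores), count_w1, count_w2)   # 500 10 400000 5000
```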

- The SoftMax Layer converts the 10 numbers of Linear Layer 2 into the probabilities that the image is a 0, 1, 2, 3, 4, 5, 6, 7, 8, or 9 respectively.

- The Output Layer outputs the digit with the highest probability.

- Since the probability for 0, 0.999996, is far higher than the others, the output is 0.
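
The SoftMax and Output steps can be checked by hand on the 10 numbers listed above as the result of Linear Layer 2. The sketch assumes the standard softmax formula (exponentiate each number, then divide by the total); the function name `softmax` is chosen for the example.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)                            # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# The 10 numbers produced by Linear Layer 2 in the example above.
scores = [12.6278, -5.8821, -12.4399, -9.37623, -18.9297,
          -3.63099, -7.21256, 0.0864815, -5.29959, -12.1295]

probs = softmax(scores)
digit = max(range(10), key=lambda d: probs[d])    # Output Layer: most probable digit
print(digit, round(probs[0], 6))
```

The first score is so much larger than the rest that nearly all of the probability lands on the digit 0, which matches the 0.999996 quoted above.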

LeNet Training

MNIST

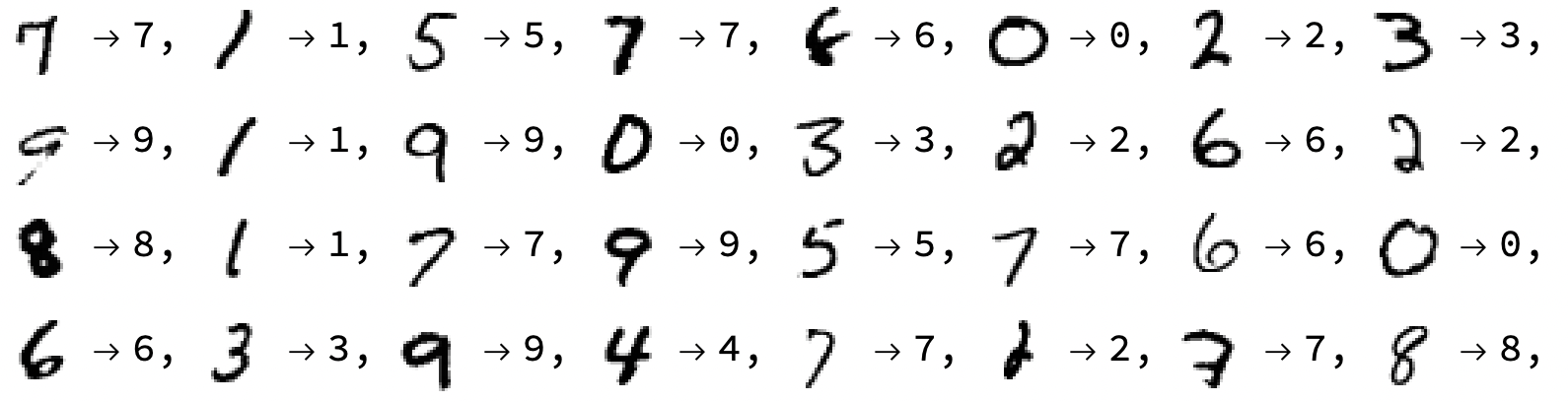

- The LeNet Network was trained on the MNIST database of 60,000 hand-written digits. A sample:

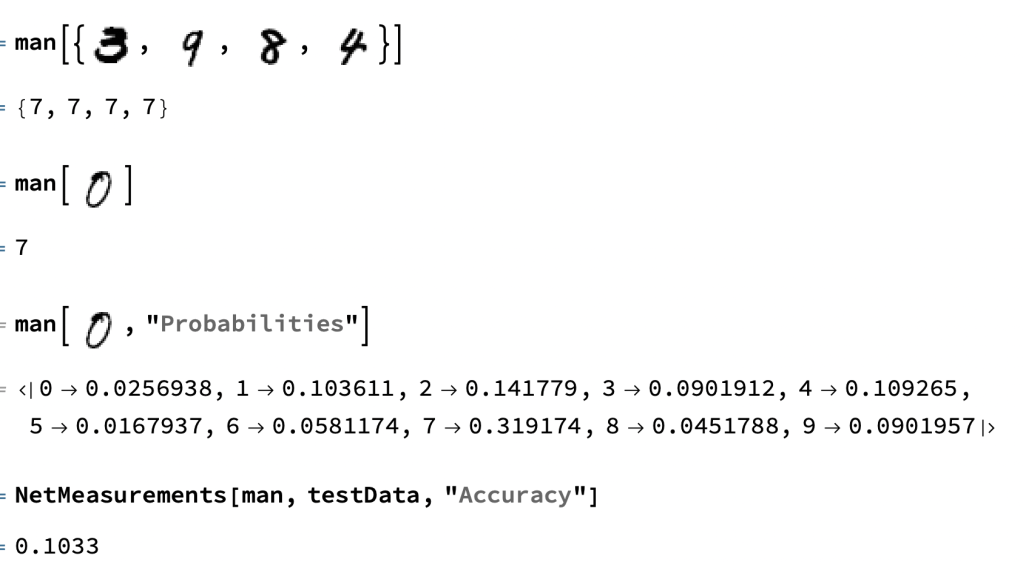

LeNet initialized with random weights but untrained

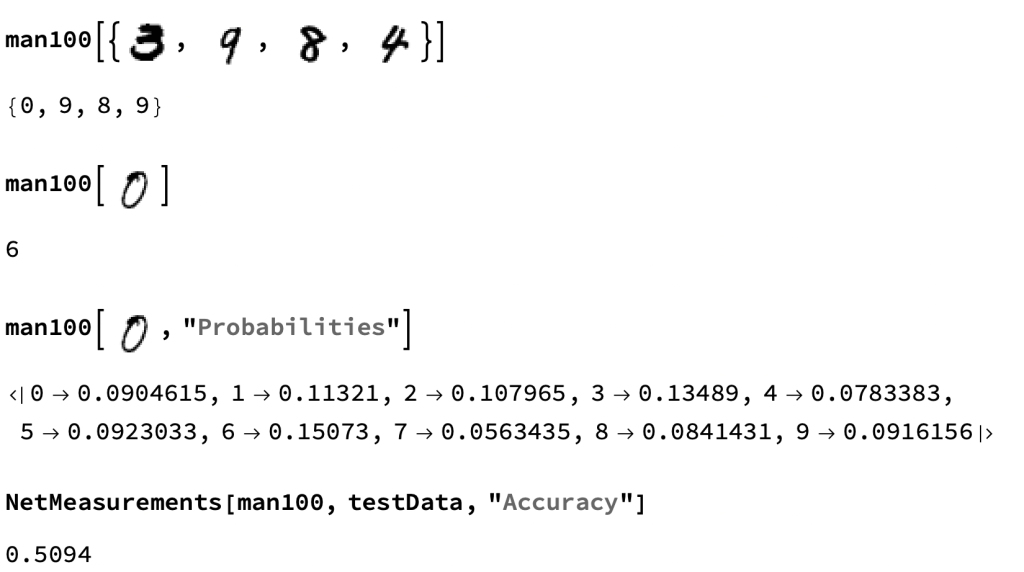

LeNet trained on 100 records

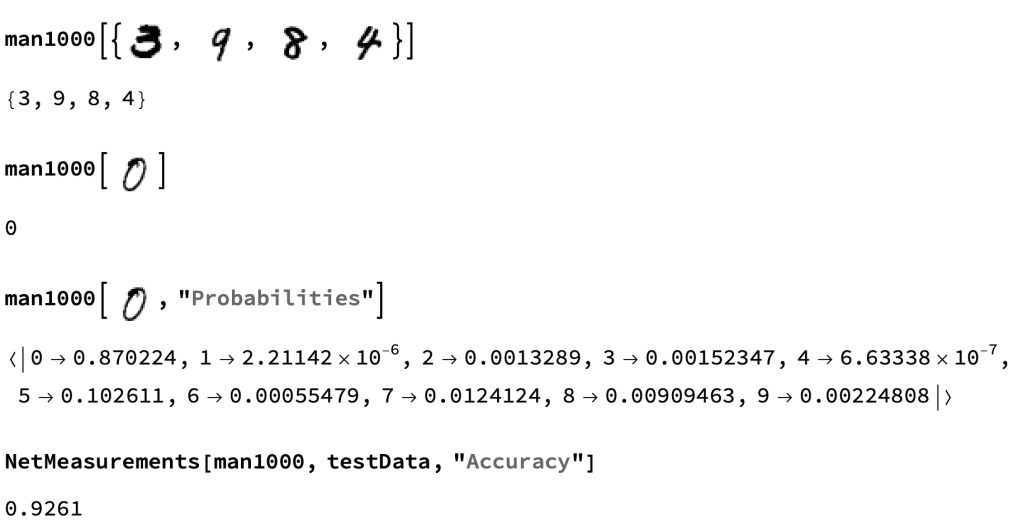

LeNet trained on 1,000 records

LeNet trained on 60,000 records

ChatGPT

- Website

- ChatGPT is a chatbot that uses GPT (versions 3, 4, 5, etc.) to generate text in response to a user’s question or request.

- GPT is a neural network that predicts the next word of a given piece of text. It’s trained on vast amounts of text, from books, articles, and web pages.

- GPT stands for Generative Pre-trained Transformer

- Generative means it generates things, e.g. explanations and code

- Pre-trained means that it’s been trained on huge amounts of text.

- Transformer is a program that translates text into blocks of numbers in a process akin to diagramming a sentence.

- A chatbot is a software application that converses with humans.

- From “What Is ChatGPT Doing … and Why Does It Work?” by Stephen Wolfram:

- The first thing to explain is that what ChatGPT is always fundamentally trying to do is to produce a “reasonable continuation” of whatever text it’s got so far, where by “reasonable” we mean “what one might expect someone to write after seeing what people have written on billions of webpages, etc.”

- And the remarkable thing is that when ChatGPT does something like write an essay what it’s essentially doing is just asking over and over again “given the text so far, what should the next word be?”—and each time adding a word.

- ChatGPT: Complete this sentence “A rational person believes things based on”

- A rational person believes things based on evidence, sound reasoning, and consistency with known facts.

- ChatGPT: Complete this sentence “A rational person makes decisions based on”

- A rational person makes decisions based on evidence, logical reasoning, and a careful evaluation of consequences.
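
The "one word at a time" loop from the Wolfram passage quoted above can be sketched with a toy stand-in for the network. Everything here is hypothetical: `NEXT_WORD` is a hand-written lookup table, and `predict_next` looks only at the last word, whereas GPT considers the whole text so far.

```python
# Hypothetical next-word table standing in for a trained network.
NEXT_WORD = {
    "beyond": "a",
    "a": "reasonable",
    "reasonable": "doubt",
    "doubt": ".",
}

def predict_next(words):
    """Toy stand-in for GPT: returns a next word, or None if it has no guess."""
    return NEXT_WORD.get(words[-1])

def generate(prompt, max_words=10):
    words = prompt.split()
    for _ in range(max_words):
        nxt = predict_next(words)     # "given the text so far, what should the next word be?"
        if nxt is None or nxt == ".":
            break                     # stop at the end of the sentence
        words.append(nxt)             # add the word and ask again
    return " ".join(words)

print(generate("beyond"))   # beyond a reasonable doubt
```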

GPT-2

- GPT-2, like its successors GPT-3, GPT-3.5, and GPT-4, is a neural network that generates the most likely next word of a piece of text. For example:

- gpt2[“beyond a reasonable”] = “ doubt”

- gpt2[“A reasonable person makes decisions based on the”] = “ facts”

- gpt2[“There is no evidence that the”] = “government”

- gpt2[“A fool and his money are soon”] = “to”

- GPT-2 also yields the top probabilities for the next word:

- gpt2[“A reasonable person makes decisions based on the”, “TopProbabilities”]

- facts->0.145321, information->0.0767478, evidence->0.050299, best->0.0442332, totality->0.0331178, circumstances->0.0218757, needs->0.016926, advice->0.0157917, interests->0.0152948

- gpt2[“A fool and his money are soon”, “TopProbabilities”]

- to->0.115383, gone->0.0505349, in->0.0406264, on->0.0286801, at->0.026871, out->0.0235931, lost->0.0231029, going->0.016185, coming->0.0126313, thrown->0.0119907, found->0.011662, taken->0.0116561, forgotten->0.0115758
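
The link between the probability lists and the single-word answers shown earlier can be sketched as follows: the single answer is simply the entry with the highest probability. The dictionary holds a few of the values listed above for “A reasonable person makes decisions based on the”, deliberately out of order; the function name `top_probabilities` is invented for the example.

```python
def top_probabilities(probs, k):
    """Return the k most likely tokens, highest probability first."""
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]

# A few of the probabilities listed above, in no particular order.
probs = {"information": 0.0767478, "facts": 0.145321, "best": 0.0442332,
         "evidence": 0.050299, "totality": 0.0331178}

top3 = top_probabilities(probs, 3)
most_likely = top3[0][0]
print(most_likely)                 # facts
print([word for word, _ in top3])  # ['facts', 'information', 'evidence']
```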

- The top layers of GPT-2:

- Input

- Embedding

- Transformer

- Classifier

- Probabilities

- Output

- Input Layer

- The Input Layer tokenizes and indexes input text, for example

- Text: “beyond a reasonable”

- Tokenization: {“be”, “yond”, “ a”, “ reasonable”}

- Token indices: {1095, 2988, 2, 6142}
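
The two steps of the Input Layer, splitting text into tokens and looking up each token's index, can be sketched with a tiny vocabulary. The four entries and their indices are the ones quoted above; everything else is an assumption of the sketch. In particular, GPT-2's real tokenizer uses byte-pair encoding, and the greedy longest-match rule below is a simplification that happens to give the same split for this text.

```python
# Tiny vocabulary built from the example above (the real one has 50,257 entries).
VOCAB = {"be": 1095, "yond": 2988, " a": 2, " reasonable": 6142}

def tokenize(text, vocab):
    """Split text into the longest matching vocabulary entries, left to right."""
    tokens, pos = [], 0
    while pos < len(text):
        for end in range(len(text), pos, -1):     # try the longest piece first
            if text[pos:end] in vocab:
                tokens.append(text[pos:end])
                pos = end
                break
        else:
            raise ValueError(f"no token matches at position {pos}")
    return tokens

tokens = tokenize("beyond a reasonable", VOCAB)
indices = [VOCAB[t] for t in tokens]
print(tokens)    # ['be', 'yond', ' a', ' reasonable']
print(indices)   # [1095, 2988, 2, 6142]
```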

- Embedding Layer

- The Embedding Layer provides a 768-number embedding vector for each token.

- An embedding vector quantifies the meaning of a word so that the more alike two words are in meaning, the closer their vectors are.

- Thus the vectors for “house” and “apartment” are closer than those for “God” and “probable.”
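
One common way to measure how close two vectors are is cosine similarity, sketched below. The choice of cosine similarity is an assumption of this example, and the four vectors are made-up 3-number stand-ins chosen only to illustrate the idea; real GPT-2 embeddings have 768 numbers each.

```python
import math

def cosine_similarity(u, v):
    """Return a value near 1 for vectors pointing the same way, near 0 for unrelated ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Made-up vectors for illustration only.
vectors = {
    "house":     [0.9, 0.8, 0.1],
    "apartment": [0.8, 0.9, 0.2],
    "God":       [0.1, 0.2, 0.9],
    "probable":  [0.7, -0.6, 0.1],
}

near = cosine_similarity(vectors["house"], vectors["apartment"])
far = cosine_similarity(vectors["God"], vectors["probable"])
print(near > far)   # True
```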

- Transformer Layer

- This complex layer of 13 modules, the core of GPT-2, transforms the embeddings of the input tokens into an embedding for the next word.

- Classifier

- The Classifier Layer uses a 50257 x 768 matrix of weights to convert the 768-number embedding of the next word into a vector corresponding to GPT’s vocabulary of 50,257 tokens.

- Probabilities Layer

- This layer converts the 50,257 entries from the Classifier Layer to 50,257 probabilities, one for each token in GPT’s token vocabulary.

- For the input text “beyond a reasonable” the largest probability is 0.764874, with token index 4464.

- The probabilities appropriately sum to 1.

- Output Layer

- The Output Layer outputs the token with index 4464: “ doubt”
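
The last three layers (Classifier, Probabilities, Output) can be sketched at a reduced scale. The sizes below, 50 tokens and 8-number embeddings, stand in for GPT-2's 50,257 and 768 so the example runs instantly; the weights and the embedding are random placeholders, and the function names are invented for the example.

```python
import math
import random

def classify(weights, embedding):
    """Classifier: one score per vocabulary token (matrix times vector)."""
    return [sum(w * e for w, e in zip(row, embedding)) for row in weights]

def to_probabilities(scores):
    """Probabilities Layer: rescale the scores so they are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

VOCAB_SIZE, EMBED_SIZE = 50, 8     # scaled down from 50,257 and 768
rng = random.Random(0)
weights = [[rng.uniform(-1, 1) for _ in range(EMBED_SIZE)] for _ in range(VOCAB_SIZE)]
embedding = [rng.uniform(-1, 1) for _ in range(EMBED_SIZE)]   # placeholder next-word embedding

probs = to_probabilities(classify(weights, embedding))
best_index = max(range(VOCAB_SIZE), key=lambda i: probs[i])   # Output Layer picks this token
print(len(probs), round(sum(probs), 6), best_index)
```

The index printed at the end plays the role of 4464 in the example above: it identifies the token to output.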

- GPT-2 uses a vocabulary of 50,257 indexed tokens. Here’s a random sample:

- ‘artment’, 1568

- ‘Reb’, 28696

- ‘ Laurel’, 43187

- ‘ Doug’, 14860

- ‘ Observatory’, 25503

- ‘ SIL’, 47296

- ‘ interests’, 5098

- ‘ty’, 519

- ‘ confuse’, 27276

- ‘ Supply’, 22408

- ‘Bob’, 18606

- ‘ye’, 5693

- ‘NING’, 15616

- ‘fully’, 2504

- ‘ medium’, 6835

- ‘ancing’, 4822

- ‘Chall’, 41557

- ‘Bad’, 21814

- ‘avering’, 42355

- ‘Quick’, 20808

Gemini 2.5 Pro

- Website

- Complete this sentence “A rational person believes things based on”

- A rational person believes things based on evidence and reason.

- Elaboration

- This means a rational individual forms their beliefs through a process of critical thinking rather than accepting ideas based on emotion, tradition, or authority alone.

- The core components are:

- Evidence: This includes verifiable facts, data, and direct observations. The quality and quantity of the evidence are crucial. A rational belief is one that is well-supported by strong evidence.

- Reason (or Logic): This is the process of using sound principles to analyze evidence and draw conclusions. It involves identifying patterns, evaluating arguments, and avoiding logical fallacies.

- In essence, a rational person’s belief system is not static. They are willing to update or even abandon their beliefs when presented with new, compelling evidence that contradicts their current understanding.

- Complete this sentence “A rational person makes decisions based on”

- A rational person makes decisions based on a logical process aimed at achieving their goals.

Old Stuff

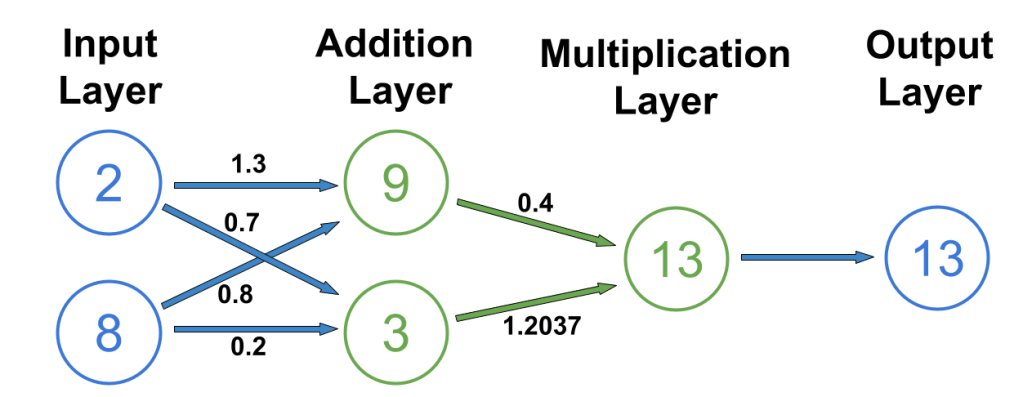

Image Credit: IBM Neural Networks

- A tiny neural network:

- The network calculates the output neuron O from the input neurons J and K.

- The six w’s are weights.

- The neurons of the Addition Layer, A and B, are calculated from the Input neurons and the weights attached to the blue arrows:

- A = wja x J + wka x K

- B = wjb x J + wkb x K

- Neuron M in the Multiplication Layer is the product of neurons A and B and the weights attached to the green arrows

- M = wam x A x wbm x B
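
The formulas for A, B, and M translate directly into code. The input values and the six weights below are hypothetical numbers chosen only to make the arithmetic easy to follow, and the sketch stops at M because the text does not give a formula for the output neuron O.

```python
def tiny_network(j, k, w):
    """Compute neurons A, B, and M from inputs J and K using the formulas above."""
    a = w["ja"] * j + w["ka"] * k      # Addition Layer
    b = w["jb"] * j + w["kb"] * k
    m = w["am"] * a * w["bm"] * b      # Multiplication Layer
    return a, b, m

# Hypothetical weights and inputs, for illustration only.
weights = {"ja": 1.0, "ka": 2.0, "jb": 3.0, "kb": 4.0, "am": 0.5, "bm": 2.0}
a, b, m = tiny_network(2.0, 1.0, weights)
print(a, b, m)   # 4.0 10.0 40.0
```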

- How the network looks when numbers are assigned to the input neurons and the weights:

- The core idea of neural networks:

- Everything in a neural network is hard-coded except the most important thing: the weights. The weights store learned information, like the synaptic connections between neurons in the brain. Thus a neural network has to learn the numeric values of its weights, which it does through machine learning. A neural network is “trained” by a training program.

- The key aspect of neural networks is that they learn like humans do. Brains learn through changes to the synaptic connections between neurons. Neural networks learn through changes to the “weights” attached to the arrows from one neuron to another. So if neuron A performs a calculation whose result is 10, and the weight on the arrow from A to B is 1.1, B receives 10 x 1.1 = 11.

- The calculations that neurons like A and B perform are hard-coded into the program. The weights, like 1.1, are not. They result from machine training.

- There’s no straightforward way of programming a computer to identify hand-written digits like these. But computer scientists have devised an ingenious, indirect method for solving problems like this: artificial neural networks, or just neural networks, or simply neural nets.

- A neural network is a computer program designed to operate like the network of neurons in the brain. The program consists of a sequence of layers of “neurons” that

- The human brain consists of a network of billions of neurons that interconnect at trillions of synapses. Signals propagate through the network as neurons fire. Learning involves changes in synaptic connections.

- The program consists of an artificial neural network whose “synaptic connections” are numbers called “weights.” Initially the weights are set to random numbers, with the result that at first the program misidentifies most hand-written digits.

- A computer image consists of pixels. So the first thing the program would do is convert the image’s pixels into a matrix of numbers. If the image is black-and-white, the numbers represent the shade of gray of each pixel.

- But how would the program figure out that these numbers represent a 0?

- One idea is to scan images of the perfect 0, the perfect 1, the perfect 2, and so on; then convert each image to its corresponding matrix of numbers.

- The straightforward, top-down approach is to develop an algorithm by thinking hard about the problem. But there’s no simple, pretty algorithm that works.

- The second approach, developed in the 40s and 50s, is to write a program that learns to identify hand-written digits the same way the brain learns things.

- The human brain is a network of 100 billion neurons interconnected at 100 trillion synapses.

- Learning involves changes in the synaptic connections between neurons (synaptic plasticity).

- So the second approach yields a program consisting of an artificial neural network whose “synaptic connections” are numbers called “weights.” Initially the weights are set to random numbers, with the result that at first the program misidentifies most hand-written digits.